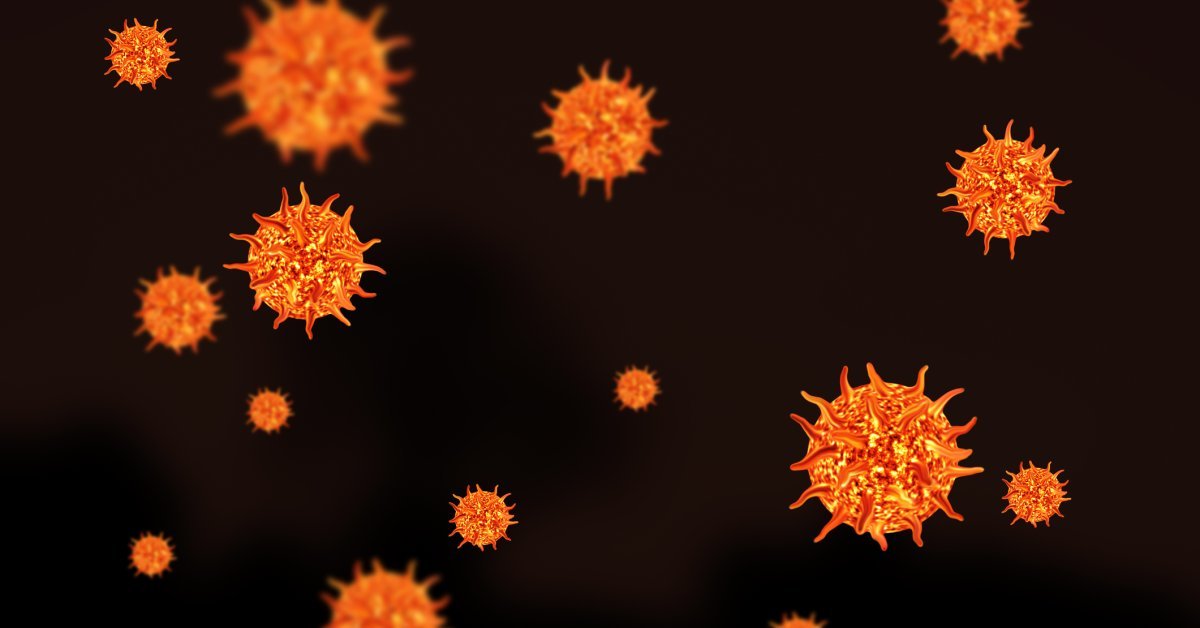

Rapid AI advancements heighten biosecurity risks, prompting calls for stronger safeguards

A new study suggests that artificial intelligence could significantly increase the likelihood of pandemics, posing a global health challenge.

Artificial intelligence (AI) could increase the risk of pandemics up to fivefold, according to a groundbreaking study from the University of Oxford and Stanford University.

Published on August 12, 2025, the research highlights how AI’s accessibility and power could enable malicious actors to engineer deadly pathogens, and how AI could also help mitigate these dangers.

AI’s Dual-Edged Sword

Empowering Malicious Actors

The study warns that AI tools, particularly large language models like ChatGPT and Grok, can simplify the creation of biological weapons.

By analyzing vast datasets, these models can give users, potentially including non-experts, step-by-step instructions for engineering pathogens.

“AI lowers the barrier for bioterrorism,” said Dr. Emily Harper, a co-author from Oxford. The research cites experiments in which AI systems provided accurate guidance on synthesizing viruses, a task once limited to highly skilled scientists.

Accessibility Concerns

The proliferation of open-source AI models exacerbates the risk. Unlike restricted systems, open-source platforms like those from Meta AI are freely available, allowing anyone to access powerful tools without oversight.

Based on current trends, the study estimates that by 2027, advances in AI could make a pandemic five times more likely to emerge from engineered pathogens than from natural outbreaks.

Potential for Good

AI as a Defense Tool

Despite the risks, AI also offers solutions. The study highlights its potential to enhance pandemic preparedness, such as accelerating vaccine development and predicting outbreak patterns.

For instance, AI models analyzed during the research identified vulnerabilities in existing biosecurity protocols, suggesting improvements. “AI can be a shield if we use it wisely,” said Dr. James Patel, a Stanford co-author.

Collaborative Efforts

The researchers advocate for partnerships between AI developers, governments, and health organizations to harness AI’s benefits.

Projects like the AI-driven Global Pathogen Surveillance Network, launched in 2024, demonstrate how AI can monitor emerging threats in real time, potentially averting disasters.

Call for Regulation

Urgent Need for Oversight

The study urges immediate regulatory action to curb AI misuse. It proposes mandatory safety protocols for AI developers, including “red-teaming” exercises to test models for harmful outputs and stricter controls on open-source AI distribution.

The EU’s AI Act, which entered into force in 2024 and whose first obligations began applying in 2025, is cited as a model, but global coordination is needed. “Without unified rules, we’re playing a dangerous game,” Dr. Harper warned.

Balancing Innovation and Safety

Regulating AI without stifling innovation is a challenge. The study notes resistance from tech firms, citing debates such as the Musk-Altman feud over AI ethics, which has played out in recent posts on X.

Yet public concern, amplified on platforms like X, is driving demands for accountability, with users calling for transparency in how AI models are developed and deployed.

Broader Implications

A Global Wake-Up Call

The research underscores the urgency of addressing AI-driven biosecurity risks as global health systems remain vulnerable post-COVID-19.

A 2025 MIT study on AI’s cognitive effects suggests that over-reliance on AI could dull critical thinking, making it harder to discern real threats.

The Oxford-Stanford findings call for a proactive approach to safeguard humanity.

Societal and Ethical Stakes

The potential for AI to both cause and prevent pandemics highlights a broader ethical dilemma: how to balance technological progress with societal safety.

As AI reshapes industries from finance (e.g., Bank of America’s stablecoin plans) to cybersecurity (e.g., the evolution of CAPTCHAs), its role in biosecurity demands urgent attention to prevent catastrophic misuse.

The content is paraphrased under fair use principles for educational and informational purposes, with full attribution to the original source.